Researchers at big tech companies are working on new ways to better understand people’s minds, but how far can they go?

Have you ever wondered what would happen if someone could read your thoughts? Well, several researchers from different institutions are working with AI to do just that, each with their own method.

As technology continues to advance, scientists may soon be able to read minds with enough accuracy to reveal what a person is thinking or seeing, and even why they might be predisposed to mental illnesses such as schizophrenia and depression.

In fact, similar technology is already in use to diagnose and treat a variety of disorders by better understanding how brains function.

For example, it is currently used to help surgeons plan how to operate on brain tumors while preserving as much healthy tissue as possible.

Additionally, it has allowed psychologists and neurologists to map relationships between various brain regions and cognitive processes like memory, language, and vision.

But now, experts are pushing AI further still.

Reading brainwaves

The latest attempt comes from Meta, Facebook’s parent company, and centres on hearing.

On August 31, experts from the company revealed that their newly developed artificial intelligence (AI) can ‘hear’ what people are hearing by simply analysing their brainwave activity.

The study, which is still in its very early phases, aims to serve as a building block for technology that can aid people with traumatic brain injuries who are unable to communicate verbally or via a keyboard. If it succeeds, scientists could capture brain activity without surgically inserting electrodes into the brain to record it.

“There are a bunch of conditions, from traumatic brain injury to anoxia [an oxygen deficiency], that basically make people unable to communicate. And one of the paths that has been identified for these patients over the past couple of decades is brain-computer interfaces,” Jean-Rémi King, a research scientist at Facebook Artificial Intelligence Research (FAIR) Lab, told TIME.

One way scientists have enabled communication is by placing an electrode on the motor regions of the patient’s brain, King explained. However, such a technique can be highly invasive, which is why his team is working to adopt other ‘safer’ methods.

“We, therefore, intended to test using non-invasive brain activity recordings. The objective was to create an AI system that can decode the brain’s reactions to stories that are uttered.”

As part of the experiment, researchers had 169 healthy adults listen to stories and words read aloud while various equipment (such as electrodes attached to their heads) monitored their brain activity.

In an effort to uncover patterns, researchers then loaded the data into an AI model. Based on the electrical and magnetic activity in participants’ brains, they wanted the algorithm to “hear” or ascertain what the participants were listening to.

Though the experiment was successful as a starting point, King said that his team ran into two main challenges: accuracy and sharpness.

“The signals that we pick up from brain activity are extremely ‘noisy.’ The sensors are pretty far away from the brain. There is a skull, there is skin, which can corrupt the signal that we can pick up. So picking them up with a sensor requires super advanced technology,” he added.

The other challenge, the expert said, is largely one of understanding how the brain represents language. He said that “even if we had a very clear signal, without machine learning, it would be very difficult to say, ‘OK, this brain activity means this word, or this phoneme, or an intent to act, or whatever.’”

The next step, King added, is to learn how to match representations of speech with representations of brain activity, and to hand both to an AI system.
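
To make the idea of matching concrete, here is a minimal Python sketch. It is not Meta’s actual system. It assumes that a brain recording and each candidate speech segment have already been turned into short lists of numbers; those vectors and the `best_match` helper are invented for illustration. The sketch simply picks the candidate whose vector points in the most similar direction, measured by cosine similarity.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(brain_vec, speech_vecs):
    """Return the label of the speech vector most similar to the brain vector, plus all scores."""
    scores = {label: cosine(brain_vec, vec) for label, vec in speech_vecs.items()}
    return max(scores, key=scores.get), scores


# Hypothetical representations: made-up numbers, not real recordings.
speech = {
    "hello": [1.0, 0.0, 0.2],
    "world": [0.0, 1.0, 0.1],
    "brain": [0.3, 0.3, 1.0],
}
brain = [0.9, 0.1, 0.3]  # pretend this came from a listener hearing "hello"

label, scores = best_match(brain, speech)
print(label)
```

With these made-up numbers the closest candidate is "hello". The hard part King describes is producing representations good enough for this kind of comparison to work on noisy recordings.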

Reading minds

While Meta’s study revolves around reading brainwaves, researchers at Radboud University in the Netherlands are aiming for a more visual result.

According to Nature, the world’s leading multidisciplinary science journal, the experts are working on a “mind-reading” technology that would enable them to reconstruct images from a person’s brain activity.

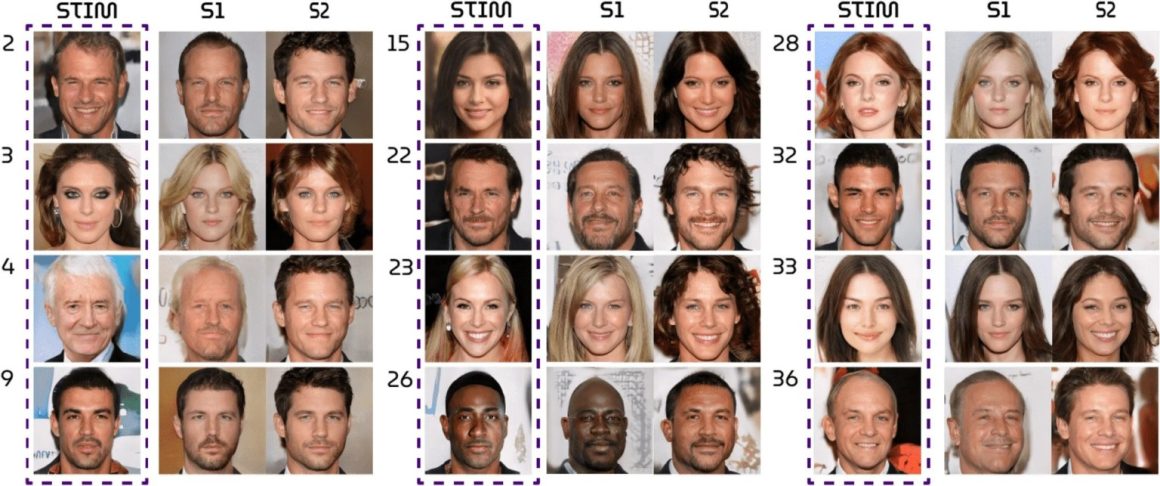

To test the AI technology, researchers showed volunteers pictures of random faces while they were inside a functional magnetic resonance imaging (fMRI) scanner, a kind of non-invasive brain imaging device that measures variations in blood flow to identify brain activity.

The fMRI scanner monitored activity in the volunteers’ vision-related brain regions as they viewed the images of faces. That data was then fed to the AI programme, which used it to reconstruct the image each volunteer had seen.

The experiment’s findings demonstrate that the fMRI/AI system was able to closely recreate the original images that the volunteers were shown.

The study’s principal investigator, Thirza Dado, an AI researcher and cognitive neuroscientist, told the Mail Online that these ‘impressive’ results show that fMRI/AI systems could successfully read minds in the future.

“I believe we can train the algorithm not only to picture accurately a face you’re looking at, but also any face you imagine vividly, such as your mother’s,” Dado explained.

“By developing this technology, it would be fascinating to decode and recreate subjective experiences, perhaps even your dreams,” Dado said. “Such technological knowledge could also be incorporated into clinical applications such as communicating with patients who are locked within deep comas.”

The expert said that the research focuses on creating technology that could help those who have lost their vision through illness or accident regain it.

“We are already developing brain-implant cameras that will stimulate people’s brains so they can see again,” Dado added.

To “train” the AI system, the volunteers had previously been shown a variety of other faces while their brains were scanned.

The key to the experiment is that the photographic images they saw weren’t of actual people at all, but rather computer-generated paint-by-numbers images, in which each small dot of light or darkness is assigned its own computer code.

The volunteers’ neuronal responses to these “training” images can be seen in the fMRI scans. The AI system then recreated each portrait by translating the volunteer’s neural response back into computer code.
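
As a toy illustration of that last step, and not the Radboud team’s method, the Python sketch below decodes a new neural response by finding the most similar response seen during training and returning the code that was paired with it. The numbers, the `decode` helper and the nearest-neighbour shortcut are all assumptions made for illustration.

```python
import math


def distance(a, b):
    """Euclidean distance between two equal-length response vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def decode(response, training_pairs):
    """Return the image code paired with the training response closest to `response`."""
    nearest = min(training_pairs, key=lambda pair: distance(response, pair[0]))
    return nearest[1]


# Hypothetical training data: (brain response, image code) pairs with made-up numbers.
training_pairs = [
    ([0.9, 0.1, 0.0], [1, 0]),
    ([0.1, 0.8, 0.1], [0, 1]),
    ([0.5, 0.5, 0.9], [1, 1]),
]

new_response = [0.2, 0.7, 0.2]  # pretend scan taken while viewing an unseen face
decoded_code = decode(new_response, training_pairs)
print(decoded_code)
```

Here the new response sits closest to the second training example, so the sketch returns that example’s code. Going from a code back to a picture, as the article describes, is a separate step that this lookup does not attempt.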